New Algorithm Accelerates Language Model Responses, Wins Award

A team of researchers led by Prof. Alex Lew has developed an algorithm that improves both the speed and the accuracy of responses generated by language models. Their paper, “Fast Controlled Generation from Language Models with Adaptive Weighted Rejection Sampling,” was named one of four “Outstanding Papers” at the recent Conference on Language Modeling (COLM) in Montreal.

The algorithm addresses a significant challenge in the use of large language models: ensuring that generated text adheres to specified constraints without sacrificing efficiency. The conference judges praised the work, stating, “It solves a real problem, and it actually works: getting large language models to respect hard constraints, and do so fast.” This is particularly valuable for applications that require precise outputs, such as producing valid Python code or JSON, using simplified language, or writing a haiku.

Lew and his co-authors have introduced an approach that applies constraints globally and efficiently. Rather than checking the constraint against every possible next word, their method checks only a small number of candidates, sharply reducing the computational cost. “I don’t need to run it on all 100,000 possible next words. I can run it maybe on three and still run this algorithm,” explained Lew, who serves as an assistant professor of computer science.

The algorithm uses techniques from computational statistics to preserve the probability distribution over the responses generated by the language model. The judges noted, “This work shows how classical probabilistic inference techniques can solve modern LLM problems.”
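The two ideas described above, checking only a few candidate words and keeping the model's probabilities intact, can be illustrated with plain rejection sampling. The sketch below is a simplified stand-in, not the paper's adaptive weighted method: it omits the importance weights, and the toy vocabulary, the `is_valid` check, and the function name `sample_constrained` are assumptions made for illustration.

```python
import random
from collections import Counter


def sample_constrained(probs, is_valid, rng):
    """Draw one token from `probs` restricted to tokens that pass `is_valid`.

    Tokens are proposed in proportion to their model probability, and the
    constraint is checked only on proposed tokens. Rejected tokens are removed
    so they are never checked twice. Returns (token, number_of_checks).
    """
    remaining = dict(probs)
    checks = 0
    while remaining:
        tokens = list(remaining)
        weights = [remaining[t] for t in tokens]
        if sum(weights) <= 0:
            break  # nothing with positive probability is left
        token = rng.choices(tokens, weights=weights, k=1)[0]
        checks += 1
        if is_valid(token):
            return token, checks
        del remaining[token]  # rejected: drop it and propose again
    raise ValueError("no token satisfies the constraint")


# Hypothetical next-token distribution; the constraint mimics "output must
# start a JSON value", so only "{" and "[" are allowed.
probs = {"{": 0.5, "[": 0.3, "hello": 0.15, ")": 0.05}


def is_valid(token):
    return token in {"{", "["}


if __name__ == "__main__":
    rng = random.Random(0)
    draws = [sample_constrained(probs, is_valid, rng) for _ in range(10_000)]
    freq = Counter(token for token, _ in draws)
    avg_checks = sum(checks for _, checks in draws) / len(draws)
    print({t: n / len(draws) for t, n in freq.items()})
    print("average constraint checks per token:", avg_checks)
```

In this toy setting the accepted tokens follow the model's own probabilities renormalized over the valid tokens (0.625 for "{" and 0.375 for "["), while the constraint is usually checked only once or twice per step instead of on the whole vocabulary.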

The research has broad practical implications. The paper demonstrates the algorithm’s effectiveness across a range of applications, from programming to scientific research, including molecular synthesis. The algorithm is open source and has been integrated into the GenLM toolkit, making it available to the research community for further development and experimentation.

This development marks a significant step forward for natural language processing. As language models continue to evolve, solutions like the one proposed by Lew and his colleagues will be essential for meeting the growing demand for precise and contextually relevant text generation.

-

Top Stories1 month ago

Top Stories1 month agoUrgent Update: Tom Aspinall’s Vision Deteriorates After UFC 321

-

Health2 months ago

Health2 months agoMIT Scientists Uncover Surprising Genomic Loops During Cell Division

-

Science1 month ago

Science1 month agoUniversity of Hawaiʻi Joins $25.6M AI Project to Enhance Disaster Monitoring

-

Top Stories2 months ago

Top Stories2 months agoAI Disruption: AWS Faces Threat as Startups Shift Cloud Focus

-

Science2 months ago

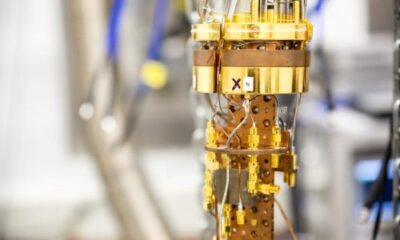

Science2 months agoTime Crystals Revolutionize Quantum Computing Potential

-

Entertainment2 months ago

Entertainment2 months agoDiscover the Full Map of Pokémon Legends: Z-A’s Lumiose City

-

World2 months ago

World2 months agoHoneywell Forecasts Record Business Jet Deliveries Over Next Decade

-

Top Stories2 months ago

Top Stories2 months agoGOP Faces Backlash as Protests Surge Against Trump Policies

-

Politics2 months ago

Politics2 months agoJudge Signals Dismissal of Chelsea Housing Case Citing AI Flaws

-

Entertainment2 months ago

Entertainment2 months agoParenthood Set to Depart Hulu: What Fans Need to Know

-

Sports2 months ago

Sports2 months agoYoshinobu Yamamoto Shines in Game 2, Leading Dodgers to Victory

-

Health2 months ago

Health2 months agoMaine Insurers Cut Medicare Advantage Plans Amid Cost Pressures