Quantum Computers: Trusting Their Answers Amid Reliability Challenges

Recent advances in quantum computing highlight both the technology's immense potential and its persistent reliability problems. For certain problems, quantum computers can in principle reach speeds unattainable by classical computers, but their outputs remain vulnerable to errors and noise. Current research focuses on making these systems more reliable, with the goal of a future in which their results can be fully trusted for practical applications.

Understanding Quantum Computing’s Power and Limitations

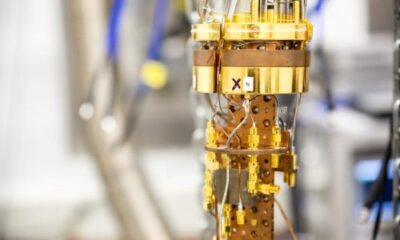

Quantum computers exploit the unusual properties of qubits, the fundamental units of information in quantum systems. A classical bit is always in one of two states (0 or 1), but a qubit can exist in a superposition of both states at once. This lets quantum computers explore many possibilities in parallel, which can dramatically accelerate certain kinds of problem-solving. For some specialized tasks, they can perform calculations that would take classical computers thousands of years to complete.
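
Superposition can be made more concrete with a tiny classical simulation. The Python sketch below models one qubit as a pair of complex amplitudes, puts it into an equal superposition with a Hadamard gate, and samples measurement outcomes. The helper names are hypothetical and not part of any quantum SDK, and the model ignores noise entirely.

```python
import math
import random

# A single qubit is described by two complex amplitudes (alpha, beta) for |0> and |1>,
# normalized so that |alpha|^2 + |beta|^2 = 1.
def hadamard(state):
    """Apply a Hadamard gate, which turns |0> into an equal superposition of |0> and |1>."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))

def probabilities(state):
    """Born rule: the chance of reading 0 or 1 is the squared magnitude of each amplitude."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)

def measure(state, rng):
    """Sample one classical bit; measurement collapses the superposition to 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

zero = (1 + 0j, 0 + 0j)       # the qubit starts in |0>
plus = hadamard(zero)         # now an equal superposition of |0> and |1>
print(probabilities(plus))    # both values close to 0.5

rng = random.Random(7)
shots = [measure(plus, rng) for _ in range(1000)]
print(sum(shots) / len(shots))  # fraction of 1s, close to 0.5

# An n-qubit register needs 2**n amplitudes, which is why simulating
# quantum computers on classical hardware becomes expensive so quickly.
for n in (10, 50, 100):
    print(n, "qubits ->", 2 ** n, "amplitudes")
```

Each individual measurement still yields a single 0 or 1; the superposition only shows up in the statistics over many runs, which is one reason quantum outputs are probabilistic.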

Despite this power, today's quantum computers, known as noisy intermediate-scale quantum (NISQ) devices, still face significant reliability problems. Qubits are fragile: disturbances such as temperature fluctuations and electromagnetic interference can cause decoherence, in which qubits lose their quantum properties, degrading the accuracy of computations. The quantum gates that operate on qubits also introduce errors of their own, further undermining the reliability of results.
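
Gate errors compound over the length of a computation. A common back-of-the-envelope estimate, assuming every gate fails independently with the same probability ε (a simplification, since real noise is often correlated and varies between gates), is that a circuit of G gates runs without any error with probability (1 - ε)^G. The short Python sketch below tabulates that estimate; the error rates and gate counts are illustrative values, not measurements from any particular device.

```python
def circuit_success_probability(gate_error, num_gates):
    """Rough estimate of P(no error anywhere), assuming independent, identical gate errors."""
    return (1 - gate_error) ** num_gates

for gate_error in (0.01, 0.001):
    for num_gates in (100, 1_000, 10_000):
        p = circuit_success_probability(gate_error, num_gates)
        print(f"error/gate={gate_error:<6} gates={num_gates:>6}  P(no error)~{p:.3g}")
```

Even a 0.1% error rate per gate leaves only about a one-in-three chance of an error-free run after 1,000 gates under this model, which is why both error correction and error mitigation matter.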

Enhancing Reliability Through Research

To make quantum answers more reliable, researchers are pursuing several approaches. One prominent technique is quantum error correction, which encodes a single logical qubit across multiple physical qubits. This redundancy allows errors to be detected and corrected before they corrupt the outcome. However, the method requires many physical qubits for every logical qubit, a significant hurdle for current hardware.
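
The benefit of redundancy can be illustrated with the simplest code of this kind, a three-bit repetition code. The Python below is a classical analogue with hypothetical helper names: real quantum error correction cannot simply copy an unknown qubit state, must handle phase errors as well as bit flips, and reads out only parity checks (the "syndrome") rather than the data itself. The sketch mimics that last step by locating a flipped bit from two parity checks, and it shows why one logical bit spread over three physical ones fails less often than a single bit when physical errors are rare.

```python
import random

def encode(bit):
    """Repetition encoding: one logical bit spread over three physical bits."""
    return [bit, bit, bit]

def apply_noise(bits, p, rng):
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]

def correct(bits):
    """Use two parity checks (the syndrome) to locate and undo a single flip."""
    s1 = bits[0] ^ bits[1]
    s2 = bits[1] ^ bits[2]
    # Each nonzero syndrome points to the bit most likely to have flipped.
    flip = {(1, 0): 0, (1, 1): 1, (0, 1): 2}.get((s1, s2))
    fixed = list(bits)
    if flip is not None:
        fixed[flip] ^= 1
    return fixed

def logical_error_rate(p, trials=50_000, seed=1):
    """Monte Carlo estimate of how often the corrected logical bit is wrong."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        noisy = apply_noise(encode(0), p, rng)
        if correct(noisy)[0] != 0:
            failures += 1
    return failures / trials

for p in (0.01, 0.05, 0.1):
    predicted = 3 * p**2 - 2 * p**3  # the code fails only if two or more bits flip
    print(f"physical p={p:.2f}  logical~{logical_error_rate(p):.4f}  predicted={predicted:.4f}")
```

The cost mentioned above is visible even here: three physical bits protect one logical bit against a single flip, and practical quantum codes need far more physical qubits per logical qubit.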

In the near term, quantum devices increasingly rely on error mitigation techniques. These methods reduce the impact of noise without the extensive resources that full error correction demands. Approaches such as statistical correction of measured results and adaptive shaping of the control pulses that drive quantum gates are being used to make gate operations more reliable and results more accurate.
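
The article does not name specific statistical methods. One widely used example, swapped in here for illustration, is zero-noise extrapolation: the same circuit is run at several deliberately amplified noise levels, and the results are extrapolated back to an estimated zero-noise value. The Python sketch below simulates this with a toy noise model; `run_circuit`, the ideal value, and the decay rate are hypothetical stand-ins for real hardware runs.

```python
import math
import random

IDEAL_VALUE = 1.0      # hypothetical noise-free expectation value of some observable
DECAY_PER_UNIT = 0.1   # hypothetical noise strength at the device's native noise level

def run_circuit(noise_scale, shots, rng):
    """Toy stand-in for a hardware run.

    Noise shrinks the true expectation value exponentially with the noise scale,
    and each shot returns +1 or -1, so the estimate also carries sampling error.
    """
    true_value = IDEAL_VALUE * math.exp(-DECAY_PER_UNIT * noise_scale)
    p_plus = (1 + true_value) / 2
    total = sum(1 if rng.random() < p_plus else -1 for _ in range(shots))
    return total / shots

def richardson_extrapolate(scales, values):
    """Fit a polynomial through the (scale, value) points and evaluate it at scale 0."""
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= s_j / (s_j - s_i)
        estimate += weight * v_i
    return estimate

rng = random.Random(42)
scales = [1.0, 2.0, 3.0]  # 1.0 = native noise; larger values = deliberately amplified noise
measured = [run_circuit(s, shots=20_000, rng=rng) for s in scales]
raw = measured[0]
mitigated = richardson_extrapolate(scales, measured)
print(f"raw estimate:       {raw:.4f}")
print(f"mitigated estimate: {mitigated:.4f}  (ideal {IDEAL_VALUE})")
```

For noise scales 1, 2, and 3 the extrapolation weights work out to 3, -3, and 1, which amplify sampling noise; mitigated estimates therefore typically need more measurement shots than raw ones. That extra sampling is the price mitigation pays in place of the extra qubits error correction would require.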

Verifying quantum outputs poses another significant challenge. This “black box” problem arises because many results produced by quantum computers cannot be efficiently checked by classical computers. To address it, scientists have developed protocols that hide “trap” calculations, whose answers are known in advance, inside complex tasks. If the quantum computer gets a trap wrong, its overall results are treated as less reliable. Statistical sampling techniques can also check parts of the output for consistency.
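
The trap idea can be sketched in a few lines. The Python below is a hypothetical classical toy: `noisy_device` stands in for an untrusted quantum computer, the tasks are simple parity computations, and the 90% pass threshold is an arbitrary choice. In real protocols the genuine computation is one classical machines cannot easily check, and the traps are disguised so the device cannot tell them apart from it; here a random shuffle plays the role of that disguise.

```python
import random
from functools import partial

def noisy_device(task, error_rate, rng):
    """Hypothetical untrusted device: should return the parity of the task's bits,
    but answers wrongly with probability error_rate."""
    correct = sum(task) % 2
    return correct ^ 1 if rng.random() < error_rate else correct

def run_with_traps(real_tasks, device, n_traps, threshold, rng):
    """Hide trap tasks, whose answers the verifier already knows, among real tasks.

    Returns the device's answers to the real tasks, whether the trap pass rate
    met the threshold, and the pass rate itself.
    """
    traps = []
    for _ in range(n_traps):
        bits = [rng.randint(0, 1) for _ in range(8)]
        traps.append((bits, sum(bits) % 2))  # answer computed by the verifier itself
    batch = [("real", i, t) for i, t in enumerate(real_tasks)]
    batch += [("trap", i, t) for i, (t, _) in enumerate(traps)]
    rng.shuffle(batch)  # the device sees one mixed stream of tasks

    real_answers = [None] * len(real_tasks)
    trap_passes = 0
    for kind, idx, task in batch:
        answer = device(task)
        if kind == "real":
            real_answers[idx] = answer
        elif answer == traps[idx][1]:
            trap_passes += 1
    pass_rate = trap_passes / n_traps
    return real_answers, pass_rate >= threshold, pass_rate

rng = random.Random(3)
real = [[rng.randint(0, 1) for _ in range(8)] for _ in range(50)]
good = partial(noisy_device, error_rate=0.01, rng=rng)
bad = partial(noisy_device, error_rate=0.30, rng=rng)

answers_good, ok_good, rate_good = run_with_traps(real, good, 200, 0.9, rng)
answers_bad, ok_bad, rate_bad = run_with_traps(real, bad, 200, 0.9, rng)
print(f"reliable device:   trap pass rate {rate_good:.2f}, accepted={ok_good}")
print(f"unreliable device: trap pass rate {rate_bad:.2f}, accepted={ok_bad}")
```

Because a device that cannot recognize the traps errs on them at the same rate as on the real tasks, the trap pass rate serves as a statistical proxy for how far its real answers can be trusted.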

A notable example comes from Swinburne University, where scientists developed a classical method for validating Gaussian Boson Sampling experiments. The technique can check, in minutes, results that would take classical computers thousands of years to compute directly.

Quantum Advantage and Future Trust

Quantum advantage serves as a practical measure of trust in quantum computing: it describes a quantum computer's ability to solve real-world problems more efficiently than classical computers. Quantum supremacy, by contrast, refers to a quantum computer solving a problem beyond the practical reach of classical systems, even if that problem has no practical use. Full user trust in quantum computing will depend on its ability to consistently deliver verified, practical solutions.

Major industry players such as IBM and Google are working to improve both the hardware and the software of quantum computers. They have reported significant gains in qubit coherence and reductions in gate error rates. Google's Willow chip, for instance, has demonstrated low error rates while operating more than 100 qubits. As these advances continue, the reliability of quantum results is expected to improve, particularly in fields such as optimization, cryptography, and materials simulation.

Despite these advances, quantum computers are not yet a perfect solution. Their outputs remain probabilistic, and classical methods cannot fully verify their answers to complex problems. Fully fault-tolerant quantum computers, able to detect and correct errors continuously throughout a computation, are still years away. Until then, careful verification and cautious interpretation of results will remain essential.

As the technology matures, confidence in quantum computing is anticipated to grow, potentially leading to new applications across science, industry, and research. Nonetheless, while quantum computers are powerful tools, they should not be trusted blindly. Verification methods and ongoing hardware improvements are crucial for ensuring that their answers become increasingly reliable over time.