OpenAI Reveals Mental Health Challenges Among ChatGPT Users

OpenAI has released new data indicating that a significant number of its ChatGPT users exhibit signs of mental health challenges. According to the report published on Monday, approximately 10% of the global population engages with ChatGPT weekly. Among these users, the company found that 0.07% display signs of serious mental health emergencies, including psychosis or mania, while 0.15% express risks related to self-harm or suicide.

Taken together, the figures suggest that nearly three million users could be affected each week, including roughly 560,000 who show signs of a mental health emergency. OpenAI reported handling around 18 billion messages each week, of which approximately 1.8 million (about 0.01%) indicate psychosis or mania.

Improving User Support

In response to these findings, OpenAI has collaborated with 170 mental health experts to enhance the chatbot’s ability to respond to users in distress. The company claims to have achieved a reduction of 65-80% in responses that do not meet safety standards. ChatGPT is now better equipped to de-escalate conversations and guide users toward professional help and crisis hotlines when necessary.

Additionally, OpenAI has introduced more frequent reminders for users to take breaks during extended interactions. While these measures aim to support users, OpenAI emphasizes that it can neither force users to contact support services nor restrict their access to the chatbot.

Another area of focus has been the emotional reliance some users may develop on AI. OpenAI estimates that 0.15% of its users and 0.03% of messages show signs of heightened emotional attachment to ChatGPT, which equates to around 1.2 million users and 5.4 million messages weekly.
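The percentages and headline counts quoted in this article can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the weekly user base is not stated directly in the article, so it is inferred here from the 560,000 and 0.07% figures, and the combined total assumes the three categories do not overlap, which the report may not guarantee. All variable names are hypothetical.

```python
from fractions import Fraction


def pct(value: str) -> Fraction:
    """Convert a percentage written as a string (e.g. "0.07") to an exact fraction."""
    return Fraction(value) / 100


# Figures quoted in the article (all weekly).
emergency_users = 560_000            # users showing signs of psychosis or mania
emergency_rate = pct("0.07")         # share of users in that category
self_harm_rate = pct("0.15")         # share of users expressing self-harm or suicide risk
reliance_rate = pct("0.15")          # share of users showing emotional reliance
weekly_messages = 18_000_000_000     # messages handled per week
psychosis_messages = 1_800_000       # messages indicating psychosis or mania
reliance_message_rate = pct("0.03")  # share of messages showing emotional reliance

# Assumption: infer the weekly user base from the article's own numbers.
implied_weekly_users = emergency_users / emergency_rate

self_harm_users = implied_weekly_users * self_harm_rate
reliance_users = implied_weekly_users * reliance_rate

# Assumption: the categories are treated as non-overlapping when summed.
combined_users = emergency_users + self_harm_users + reliance_users

reliance_messages = weekly_messages * reliance_message_rate
psychosis_message_pct = Fraction(psychosis_messages, weekly_messages) * 100

print(f"Implied weekly users:      {int(implied_weekly_users):,}")
print(f"Self-harm risk users:      {int(self_harm_users):,}")
print(f"Emotional reliance users:  {int(reliance_users):,}")
print(f"Combined (no overlap):     {int(combined_users):,}")
print(f"Emotional reliance msgs:   {int(reliance_messages):,}")
print(f"Psychosis/mania msg share: {float(psychosis_message_pct)}%")
```

Under these assumptions the implied user base is 800 million, the combined total is 2.96 million users (the "nearly three million" cited above), and the 1.8 million psychosis or mania messages correspond to 0.01% of weekly traffic.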

Addressing Serious Concerns

The urgency of these improvements has been underscored by tragic incidents, including the death of a 16-year-old who reportedly sought advice from ChatGPT on self-harm. This incident has raised serious questions about the potential consequences of the chatbot’s responses. OpenAI’s ongoing efforts to implement better safety measures were partly motivated by such concerns.

While OpenAI has tightened rules for underage users, it has also introduced features allowing adults to engage ChatGPT in more personalized interactions, including creative writing. Critics argue that these features could inadvertently increase emotional reliance on the chatbot, complicating the balance between user engagement and mental health safety.

OpenAI’s latest report highlights the complexity of managing mental health issues in digital interactions. The company’s commitment to improving its service reflects a growing recognition of the role technology plays in mental health and the necessity for responsible AI development.

-

Top Stories1 month ago

Top Stories1 month agoUrgent Update: Tom Aspinall’s Vision Deteriorates After UFC 321

-

Health1 month ago

Health1 month agoMIT Scientists Uncover Surprising Genomic Loops During Cell Division

-

Science4 weeks ago

Science4 weeks agoUniversity of Hawaiʻi Joins $25.6M AI Project to Enhance Disaster Monitoring

-

Top Stories1 month ago

Top Stories1 month agoAI Disruption: AWS Faces Threat as Startups Shift Cloud Focus

-

Science2 months ago

Science2 months agoTime Crystals Revolutionize Quantum Computing Potential

-

World2 months ago

World2 months agoHoneywell Forecasts Record Business Jet Deliveries Over Next Decade

-

Entertainment1 month ago

Entertainment1 month agoDiscover the Full Map of Pokémon Legends: Z-A’s Lumiose City

-

Top Stories2 months ago

Top Stories2 months agoGOP Faces Backlash as Protests Surge Against Trump Policies

-

Entertainment2 months ago

Entertainment2 months agoParenthood Set to Depart Hulu: What Fans Need to Know

-

Politics2 months ago

Politics2 months agoJudge Signals Dismissal of Chelsea Housing Case Citing AI Flaws

-

Sports2 months ago

Sports2 months agoYoshinobu Yamamoto Shines in Game 2, Leading Dodgers to Victory

-

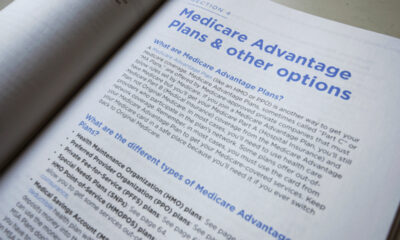

Health2 months ago

Health2 months agoMaine Insurers Cut Medicare Advantage Plans Amid Cost Pressures