Experts Explore Human-AI Collaboration for Better Diagnoses

On October 23, 2025, the Whiting School of Engineering's Department of Computer Science welcomed Aaron Roth, a professor of computer and cognitive science at the University of Pennsylvania, to discuss his research on improving collaboration between humans and artificial intelligence (AI). His lecture, titled "Agreement and Alignment for Human-AI Collaboration," drew on findings from three academic papers and examined how AI can assist in high-stakes decisions, particularly in healthcare.

Understanding Human-AI Interaction

As AI is integrated into more sectors, understanding its role in human decision-making becomes crucial. Roth illustrated this with AI-assisted medical diagnosis. In this scenario, the AI generates predictions from data such as previous diagnoses and patient symptoms, and the physician then evaluates those predictions using their own expertise.

When the AI's assessment and the doctor's judgment disagree, Roth explained, the two can exchange and revise their estimates over a finite number of rounds. Each round lets one party take into account what the other's estimate reveals about information it does not hold, so the AI and the doctor combine their distinct insights and eventually reach a consensus. Roth identified the key assumption behind this as a common prior: both parties start from the same foundational assumptions about the world, even though the specific information each holds differs.
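The back-and-forth described above can be illustrated with the textbook, idealized version of this dialogue in the spirit of Aumann's agreement theorem. It is not a protocol taken from Roth's papers. The sketch assumes a finite set of possible patient states, a common prior over them, and each party's private information modeled as a partition of those states: a party knows which block contains the true state, but not which state within it. The parties take turns announcing their probability for an event. Each announcement rules out every state in which the speaker would have said something different, and the dialogue stops once a full round rules out nothing new. All function names and the example data are hypothetical.

```python
from fractions import Fraction


def cell(partition, state):
    """Return the block of `partition` (a list of frozensets) containing `state`."""
    for block in partition:
        if state in block:
            return block
    raise ValueError(f"state {state} is not covered by the partition")


def posterior(prior, event, info):
    """P(event | info) under `prior`, a dict mapping state -> probability."""
    total = sum(prior[s] for s in info)
    return sum(prior[s] for s in info if s in event) / total


def agree(prior, partitions, event, true_state, max_rounds=100):
    """Alternate announcements of P(event) until a full round changes nothing.

    Returns the history as a list of (speaker_index, announced_probability).
    """
    public = set(prior)  # states consistent with everything announced so far
    history = []
    for _ in range(max_rounds):
        changed = False
        for i, part in enumerate(partitions):
            q = posterior(prior, event, cell(part, true_state) & public)
            history.append((i, q))
            # Listeners discard states where speaker i would have announced something else.
            new_public = {
                s for s in public
                if posterior(prior, event, cell(part, s) & public) == q
            }
            changed |= new_public != public
            public = new_public
        if not changed:
            return history
    raise RuntimeError("no agreement within max_rounds")


if __name__ == "__main__":
    prior = {s: Fraction(1, 4) for s in range(4)}        # four equally likely states
    ai = [frozenset({0, 1}), frozenset({2, 3})]          # hypothetical AI information
    doctor = [frozenset({0, 1, 2}), frozenset({3})]      # hypothetical doctor information
    for speaker, q in agree(prior, [ai, doctor], event={0, 3}, true_state=0):
        print(("AI", "doctor")[speaker], q)
```

In this example the AI first announces 1/2 and the doctor 1/3; the doctor's answer rules out state 3, the AI's next answer rules out state 2, and from then on both announce 1/2. Every update scans the whole state space, which hints at why such fully Bayesian reasoning is hard to carry out when the space of possibilities is large.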

The framework Roth described assumes what he called Perfect Bayesian Rationality. In this model, each party knows what kind of information the other holds but not the specifics of that information. Although the model guarantees agreement in principle, Roth acknowledged its practical challenges: a common prior is difficult to establish, and the analysis becomes complex for multi-dimensional prediction tasks such as assigning hospital diagnostic codes.

The Role of Calibration and Market Dynamics

Roth emphasized calibration as a route to agreement between AI and human stakeholders. He illustrated the concept with weather forecasting: a calibrated forecaster's "30% chance of rain" means that it actually rains on about 30% of the days that receive that forecast. In doctor-AI collaboration, calibration involves the AI refining its predictions in light of the doctor's responses. For example, if the AI estimates a 40% risk for a treatment and the physician suggests 35%, the AI's subsequent assessments would fall between those two figures, helping the two reach consensus more quickly.
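Calibration in the weather-forecasting sense can be checked directly against past forecasts. The sketch below assumes binary outcomes (1 for rain, 0 for no rain), forecasts expressed as probabilities between 0 and 1, and equal-width bins. It is a generic diagnostic rather than a method from Roth's papers, and the function names are hypothetical.

```python
from collections import defaultdict


def calibration_table(forecasts, outcomes, n_bins=10):
    """Bin forecasts and compare each bin's average forecast with the observed rate.

    Returns a list of (bin_index, count, mean_forecast, observed_rate).
    """
    bins = defaultdict(list)
    for p, y in zip(forecasts, outcomes):
        b = min(int(p * n_bins), n_bins - 1)  # a forecast of exactly 1.0 goes in the top bin
        bins[b].append((p, y))
    table = []
    for b in sorted(bins):
        pairs = bins[b]
        mean_forecast = sum(p for p, _ in pairs) / len(pairs)
        observed_rate = sum(y for _, y in pairs) / len(pairs)
        table.append((b, len(pairs), mean_forecast, observed_rate))
    return table


def max_calibration_error(table):
    """Largest gap between average forecast and observed frequency across bins."""
    return max(abs(forecast - observed) for _, _, forecast, observed in table)


if __name__ == "__main__":
    # Hypothetical record: ten days forecast at 30% (3 rainy), ten at 70% (7 rainy).
    forecasts = [0.3] * 10 + [0.7] * 10
    outcomes = [1] * 3 + [0] * 7 + [1] * 7 + [0] * 3
    table = calibration_table(forecasts, outcomes)
    print(max_calibration_error(table))
```

A forecaster who said 90% on ten days but saw rain on only five would show an error of about 0.4 in that bin, even if its forecasts ranked rainy days sensibly overall.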

While the discussion focused mainly on scenarios in which the AI and the human share a common goal, Roth also addressed potential conflicts of interest. For instance, if an AI model is developed by a pharmaceutical company, its recommendations may favor the company's products. To mitigate this risk, Roth proposed that doctors consult multiple AI models from different drug companies, creating competition among providers. That competition could push providers toward less biased models, since each company has an incentive to build a model that physicians prefer.

In closing, Roth addressed the notion of real probabilities, meaning probabilities that accurately reflect the complexities of the world. Although exact probabilities would be ideal, he noted that in many cases it is enough for predicted probabilities to be unbiased under specific conditions. By using data to enforce such conditions, healthcare professionals and AI can collaborate to improve diagnostic accuracy and treatment decisions.
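One way to make "unbiased under specific conditions" concrete is to require that, within each subgroup of interest, the average predicted probability matches the observed outcome rate, a property related to what the research literature calls multiaccuracy. The sketch below measures that bias for a few hypothetical conditions on patient records, then patches the predictions by repeatedly shifting the most biased subgroup by its measured bias. The record fields, condition names, and tolerance are illustrative assumptions, and this simple additive patch is not presented as Roth's algorithm.

```python
def conditional_bias(preds, outcomes, records, conditions):
    """Average (outcome - prediction) over records satisfying each condition.

    0 means unbiased on that subgroup; None means the subgroup is empty.
    """
    report = {}
    for name, holds in conditions.items():
        residuals = [y - p for p, y, r in zip(preds, outcomes, records) if holds(r)]
        report[name] = sum(residuals) / len(residuals) if residuals else None
    return report


def patch_predictions(preds, outcomes, records, conditions, tol=1e-6, max_iters=1000):
    """Shift the most biased subgroup by its bias (clipped to [0, 1]) until all are within tol."""
    preds = list(preds)
    for _ in range(max_iters):
        report = conditional_bias(preds, outcomes, records, conditions)
        measured = [(name, b) for name, b in report.items() if b is not None]
        if not measured:
            return preds
        name, bias = max(measured, key=lambda item: abs(item[1]))
        if abs(bias) <= tol:
            return preds
        holds = conditions[name]
        preds = [min(1.0, max(0.0, p + bias)) if holds(r) else p
                 for p, r in zip(preds, records)]
    raise RuntimeError("predictions did not converge within max_iters")


if __name__ == "__main__":
    records = [{"age": 70}, {"age": 80}, {"age": 30}, {"age": 40}]  # hypothetical patients
    outcomes = [1, 1, 0, 0]
    conditions = {
        "everyone": lambda r: True,
        "over_65": lambda r: r["age"] > 65,
        "65_or_under": lambda r: r["age"] <= 65,
    }
    fixed = patch_predictions([0.5] * 4, outcomes, records, conditions)
    print(fixed, conditional_bias(fixed, outcomes, records, conditions))
```

A flat 50% prediction is unbiased for "everyone" yet biased by +0.5 and -0.5 on the two age groups, which is exactly the kind of failure a subgroup check exposes. With only four records the patch collapses onto the outcomes; in practice the conditions would be checked on held-out data.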

The exploration of human-AI collaboration remains a critical area of research, particularly as AI continues to permeate various aspects of society. Roth’s insights into agreement and alignment provide a foundation for future advancements in this field.

-

Top Stories1 month ago

Top Stories1 month agoUrgent Update: Tom Aspinall’s Vision Deteriorates After UFC 321

-

Health1 month ago

Health1 month agoMIT Scientists Uncover Surprising Genomic Loops During Cell Division

-

Science4 weeks ago

Science4 weeks agoUniversity of Hawaiʻi Joins $25.6M AI Project to Enhance Disaster Monitoring

-

Top Stories1 month ago

Top Stories1 month agoAI Disruption: AWS Faces Threat as Startups Shift Cloud Focus

-

Science2 months ago

Science2 months agoTime Crystals Revolutionize Quantum Computing Potential

-

World2 months ago

World2 months agoHoneywell Forecasts Record Business Jet Deliveries Over Next Decade

-

Entertainment1 month ago

Entertainment1 month agoDiscover the Full Map of Pokémon Legends: Z-A’s Lumiose City

-

Top Stories2 months ago

Top Stories2 months agoGOP Faces Backlash as Protests Surge Against Trump Policies

-

Entertainment2 months ago

Entertainment2 months agoParenthood Set to Depart Hulu: What Fans Need to Know

-

Politics2 months ago

Politics2 months agoJudge Signals Dismissal of Chelsea Housing Case Citing AI Flaws

-

Sports2 months ago

Sports2 months agoYoshinobu Yamamoto Shines in Game 2, Leading Dodgers to Victory

-

Health2 months ago

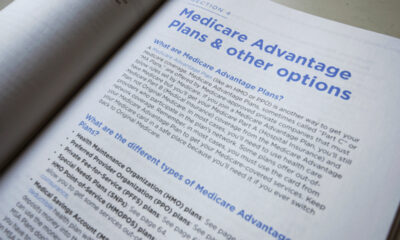

Health2 months agoMaine Insurers Cut Medicare Advantage Plans Amid Cost Pressures