Meta Faces Lawsuit Over Alleged Sex-Trafficking Policy on Instagram

UPDATE: A new lawsuit against Meta, the owner of Facebook and Instagram, claims the company operated a "17x" policy that allowed sex traffickers to post sexual solicitation content up to 16 times before facing account suspension. The allegation is part of a broader claim that Meta prioritized profit over the safety of children, made in a case proceeding in the U.S. District Court in Oakland, California.

The filing, submitted by children, parents, school districts, and states including California, alleges that Meta, Google's YouTube, Snapchat's parent company Snap, and TikTok intentionally designed their platforms to addict children despite knowing the potential for harm. The plaintiffs are seeking unspecified damages and a court order requiring the companies to stop what they describe as "harmful conduct" and to warn users about the addictive nature of their social media products.

Internal communications cited in the lawsuit indicate that Instagram's account recommendation feature suggested nearly 2 million minors to adults seeking to groom children. In a single day in 2022, more than 1 million inappropriate adult accounts were allegedly presented to teenage users. A Meta employee allegedly stated that Facebook's recommendation system was responsible for 80% of inappropriate adult-minor connections.

The plaintiffs argue that although Meta earned $62.4 billion in profit last year, it consistently neglected the safety of its young users. "Meta simply refused to invest resources in keeping kids safe," they claim, describing a culture that put engagement ahead of safety.

A Meta spokesperson rejected the accusations, stating, "We strongly disagree with these allegations, which rely on cherry-picked quotes and misinformed opinions." The spokesperson pointed to the company's ongoing safety efforts, including the introduction of Teen Accounts with built-in protections.

However, the lawsuit cites internal reports suggesting that Meta failed to adequately address child exploitation on its platforms. For instance, Instagram allegedly lacked a reporting mechanism for child sexual abuse material until March 2020. The suit also alleges that even when the company's artificial intelligence tools identified such material with 100% accuracy, it was not automatically deleted because of concerns about "false positives."

The lawsuit is still in the evidence-gathering phase, but the filing paints a grim picture. It alleges that social media platforms have disrupted classrooms and contributed to a mental health crisis among young people. In internal messages, Meta researchers reportedly likened the company to "pushers," enticing teens despite the adverse effects.

In testimony before Congress, Meta CEO Mark Zuckerberg insisted he did not set goals to increase user engagement time. Internal communications cited in the suit suggest otherwise, indicating that increasing user time, especially among teens, was a priority.

As the lawsuit unfolds, it highlights the urgent need for accountability in how tech companies safeguard their youngest users. If proven true, these allegations could lead to significant changes in how social media platforms operate and how they protect minors.

The next steps in the proceedings could set major precedents for the tech industry as it weighs profit against user safety. Stay tuned for further updates on this developing story as authorities and advocacy groups continue to push for stricter regulation of social media platforms.

-

Top Stories1 month ago

Top Stories1 month agoUrgent Update: Tom Aspinall’s Vision Deteriorates After UFC 321

-

Health1 month ago

Health1 month agoMIT Scientists Uncover Surprising Genomic Loops During Cell Division

-

Science4 weeks ago

Science4 weeks agoUniversity of Hawaiʻi Joins $25.6M AI Project to Enhance Disaster Monitoring

-

Top Stories1 month ago

Top Stories1 month agoAI Disruption: AWS Faces Threat as Startups Shift Cloud Focus

-

Science2 months ago

Science2 months agoTime Crystals Revolutionize Quantum Computing Potential

-

World2 months ago

World2 months agoHoneywell Forecasts Record Business Jet Deliveries Over Next Decade

-

Entertainment1 month ago

Entertainment1 month agoDiscover the Full Map of Pokémon Legends: Z-A’s Lumiose City

-

Top Stories2 months ago

Top Stories2 months agoGOP Faces Backlash as Protests Surge Against Trump Policies

-

Entertainment2 months ago

Entertainment2 months agoParenthood Set to Depart Hulu: What Fans Need to Know

-

Politics2 months ago

Politics2 months agoJudge Signals Dismissal of Chelsea Housing Case Citing AI Flaws

-

Sports2 months ago

Sports2 months agoYoshinobu Yamamoto Shines in Game 2, Leading Dodgers to Victory

-

Health2 months ago

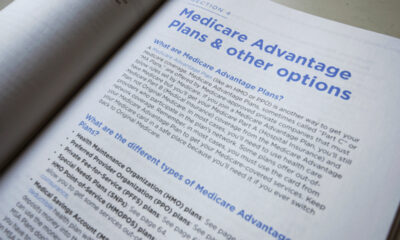

Health2 months agoMaine Insurers Cut Medicare Advantage Plans Amid Cost Pressures